Unknown Knowns: The Perils of Blind Spots

When demonstrating due diligence, it’s not just what you know and who you know, it’s what you don’t know that you know.

Donald Rumsfeld’s infamous 2002 quote provoked much discussion: “…as we know, there are known knowns; there are things we know we know. We also know there are known unknowns; that is to say we know there are some things we do not know…there are also unknown unknowns – the ones we don't know we don't know. It is the latter category that tend to be the difficult ones.”

Rumsfeld’s comment emphasised the importance of unforeseen (and possibly unforeseeable) risks. However, he did not speak about a potential fourth category, the ‘unknown knowns.’

T. E. Lawrence wrote of the ideal military organisation having “perfect 'intelligence,' so that we could plan in certainty.” In practice, this is essentially impossible. An executive’s difficulty in knowing what is happening throughout their organisation increases exponentially with the organisation’s size. This gives rise to many well-known and resented management frameworks, including risk and quality systems, communication protocols, timesheets, and so on.

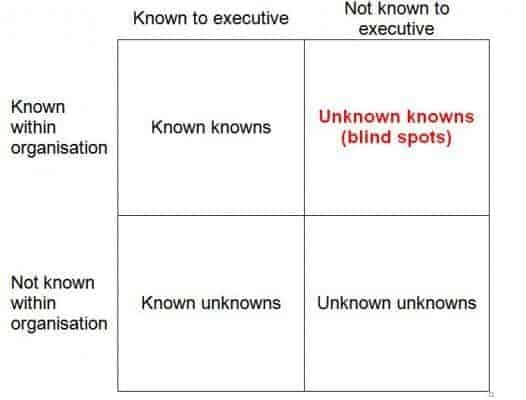

A heuristic technique known as the Johari Window considers the intersection of a person’s state of knowledge with that of their surrounding community. Adapting this to a organisation’s executive’s point of view gives the following Rumsfeldian categories:

These ‘unknown knowns,’ or blind spots, may take a range of forms, including different solutions implemented in different departments for similar problems. At best this is inefficient. At worst, if something goes badly wrong, it demonstrates that the organisation had a different and clearly reasonable way to address the issue but failed to use it. Similarly, recognised good practice may be known and understood within an organisation but not communicated to those who would fund its implementation. If something then goes wrong that good practice measures could have prevented, the organisation (and its relevant managers) is left open to charges of negligence.

Blind spots may also take the form of operations teams using workarounds to bypass systems imposed by management that they see as inefficient or of low value. These workarounds may arise from benevolent or benign intentions, but can also involve the deliberate flouting of rules or laws, as seen in the recurring ‘rogue financial trader’ scandals.

These scenarios occur again and again in large organisations, and regularly appear in high-profile crisis management media stories. A prominent recent case is Volkswagen’s 2015 diesel emissions controversy. Volkswagen’s CEO admitted that from 2009 to 2014 up to eleven million of its diesel cars (including 91,000 in Australia) had deliberate “defeat” software installed.

This software reduces engine emissions (and hence performance) when it detects that the vehicle is undergoing regulatory emissions testing, such as that conducted by the United States Environmental Protection Agency (EPA). During normal driving, the software increases vehicle performance (and emissions). This allowed the vehicles to gain approval from US EPA regulators while still being marketed as high-performance cars.

Following the admission, Volkswagen suspended sales of some models and stated that it had set aside 6.5 billion euros to deal with the issue and its fallout. The CEO resigned, and a new chair was elected to the supervisory board. Dozens of lawsuits have since been filed against the company, including a US$61 billion suit from the US Department of Justice.

One investigation into this matter drew on sociologist Diane Vaughan’s study of the 1986 Challenger space shuttle disaster, citing her concept of “normalisation of deviance.” The investigation stated that, rather than explicit or implicit executive direction to game the emissions testing regime, “…it’s more likely that the scandal is the product of an engineering organisation that evolved its technologies in a way that subtly and stealthily, even organically, subverted the rules.”

This can occur through ongoing ‘tweaking’ by system engineers, with no single change considered enough to break ‘the rules’ but with the accumulation over time enough to go past approved limits. Workforce turnover obviously plays a role in this, with the gradually evolving status quo more likely to be accepted than challenged by each new employee. The Volkswagen board chairman’s statement that “we are talking here not about a one-off mistake but a chain of errors” supports this view, with the German investigation’s chief prosecutor subsequently stating that “no former or current board members” were under investigation.
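The arithmetic behind this kind of drift can be shown with a minimal sketch. All figures here are hypothetical and chosen only for illustration (they are not Volkswagen data): a starting level of 80 against an approved limit of 100, with each 'tweak' adding 3 per cent and an assumed rule of thumb that any single change under 5 per cent attracts no scrutiny.

```python
# Hypothetical values for illustration only; none of these come from the Volkswagen case.
APPROVED_LIMIT = 100.0    # assumed approved limit, arbitrary units
REVIEW_THRESHOLD = 5.0    # assumed: a single change below this percentage goes unchallenged


def simulate_drift(start, step_pct, steps):
    """Apply successive small increases, each judged only against the previous value."""
    level = start
    history = []
    for n in range(1, steps + 1):
        level *= 1 + step_pct / 100
        # Record the change number, the new level, and whether the limit is now exceeded.
        history.append((n, level, level > APPROVED_LIMIT))
    return history


STEP_PCT = 3.0
history = simulate_drift(start=80.0, step_pct=STEP_PCT, steps=10)

# Every individual change is small enough to pass unnoticed...
every_step_unchallenged = STEP_PCT < REVIEW_THRESHOLD

# ...yet the accumulated level still crosses the approved limit.
first_breach = next(n for n, _, over in history if over)

for n, level, over in history:
    print(f"change {n}: level {level:.1f} {'(over limit)' if over else ''}")
```

Under these assumptions the limit is crossed at the eighth change, although no individual change would have been questioned. A review that compares each change with the approved baseline, rather than with the previous state, would catch the drift earlier.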

In almost all of these scenarios, it is eventually found that someone, somewhere in the organisation, was aware of the issue and had misgivings about the organisation’s course of action. And when this knowledge becomes public, it often does serious damage to the organisation's reputation.

One approach to teasing out these often complex and hidden views, decisions and knowledge is the ‘generative interview’ technique. This is based on British psychologist James Reason’s classifications of organisational culture, which run on a spectrum from pathological, through bureaucratic, to generative. These classifications signify a range of organisational cultural characteristics. Three key indicators of executive blind spots are an organisation’s response to failure, its response to new ideas, and its attitude to issues within the organisation.

Pathological organisations punish failure (motivating employees to conceal it), actively discourage new ideas, and don’t want to know about issues. Bureaucratic organisations provide local fixes for failures, think that new ideas often present problems, and may find out about organisational issues if staff persist in speaking out. Generative organisations implement far-reaching reforms to address failures, welcome new ideas, and actively seek to find issues.

Generative interviews adopt a communication approach with the characteristics of a generative organisational culture. They aim to gain the insight of ‘good players’ at a range of levels within an organisation. They are conducted in the spirit of enquiry rather than audit. That is, they look for views, ideas and solutions rather than just for problems or non-conformances, while still listening carefully to any issues raised. If an interesting idea or view is common to multiple levels of an organisation, this indicates that it should be further investigated.
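The last point, flagging views that recur across levels, can be sketched as a simple tally. This is only an illustration under stated assumptions: the interview notes, level names, themes and the `min_levels` cut-off are all hypothetical, and in practice interviewers would make this judgement qualitatively.

```python
from collections import defaultdict

# Hypothetical interview notes: (organisational level, theme raised by the interviewee).
notes = [
    ("executive", "contractor induction"),
    ("middle management", "permit backlog"),
    ("operations", "permit backlog"),
    ("operations", "contractor induction"),
    ("operations", "spare parts storage"),
    ("middle management", "contractor induction"),
]


def themes_across_levels(notes, min_levels=2):
    """Return themes raised at `min_levels` or more distinct levels, with those levels."""
    levels_by_theme = defaultdict(set)
    for level, theme in notes:
        levels_by_theme[theme].add(level)
    return {
        theme: sorted(levels)
        for theme, levels in levels_by_theme.items()
        if len(levels) >= min_levels
    }


# Themes heard at two or more levels are candidates for further investigation.
flagged = themes_across_levels(notes)
for theme, levels in flagged.items():
    print(f"{theme}: raised by {', '.join(levels)}")
```

A theme raised at only one level is not discarded. It simply has not yet met the cross-level test described above.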

When trying to demonstrate diligence in executive decision-making, this harnessing of knowledge at all levels of the organisation is critical. Without it, senior decision-makers may overlook well-known critical issues, and reasonable precautions may be missed. In a post-event investigation, it is difficult to demonstrate diligence if someone within the organisation knew about what could have gone wrong or how to prevent it but could not communicate this to those with the power to address it.

This approach is not a panacea for identifying issues faced by an organisation. However, it helps executives focus on, identify and address their organisational blind spots. In this manner it helps answer a key aspect of due diligence in decision-making: what are our unknown knowns?

This article first appeared on Sourceable.